Robots.txt is a plain text file at the root of your website that tells search engine crawlers, such as Googlebot, which pages and files they are allowed to crawl.

Enter your website URL to learn more about yours!

There may be pages on your website that you don’t want search engines to crawl. A robots.txt file lets you specify which paths crawlers may visit and which they should skip.
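To make this concrete, here is a minimal sketch of how allow and disallow rules are read, using Python’s standard `urllib.robotparser` module. The rules, the `www.example.com` URLs and the `is_allowed` helper are made up for illustration and are not taken from any real website or from the checker tool itself.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site (an assumption for this sketch).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Allow: /blog/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given rules permit user_agent to crawl url."""
    parser = RobotFileParser()
    # parse() accepts the file as a list of lines, so no network request is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/seo-tips", "/admin/login", "/cart/checkout"):
        url = "https://www.example.com" + path
        verdict = "allowed" if is_allowed(ROBOTS_TXT, url) else "blocked"
        print(path, "->", verdict)
```

Paths that no rule mentions are treated as allowed, so you only need to list the sections you want crawlers to skip.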

This can help you organise your SEO, because you can keep low-value or outdated pages away from crawlers.

That way, search engines focus on the pages that offer high-quality content to your target audience.

One of the goals is to make sure that your important content is seen by search engines.

Another reason it’s recommended is that it can reduce the load crawlers place on your server, which matters if your website has a lot of content.
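One way some sites try to limit crawler load is the non-standard `Crawl-delay` directive. The sketch below, again using Python’s `urllib.robotparser`, reads that value from a hypothetical rule set; the 10-second figure and the `get_crawl_delay` helper are assumptions for illustration. Support varies by crawler, and Googlebot in particular does not follow this directive, so treat it as a hint rather than a guarantee.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser

# Hypothetical rules: ask crawlers to wait between requests and skip a large archive.
ROBOTS_TXT_WITH_DELAY = """\
User-agent: *
Crawl-delay: 10
Disallow: /archive/
"""


def get_crawl_delay(robots_txt: str, user_agent: str = "*") -> Optional[int]:
    """Return the Crawl-delay value for user_agent, or None if none is set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent)


if __name__ == "__main__":
    print("Requested delay in seconds:", get_crawl_delay(ROBOTS_TXT_WITH_DELAY))
```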

In just two easy steps, you can better understand the effectiveness of your robots.txt file.

You’ll also get tips on how to improve it so that you can create the best possible robots.txt file for your website!

At the top of the page, the tool explains clearly what a robots.txt file means for a website.

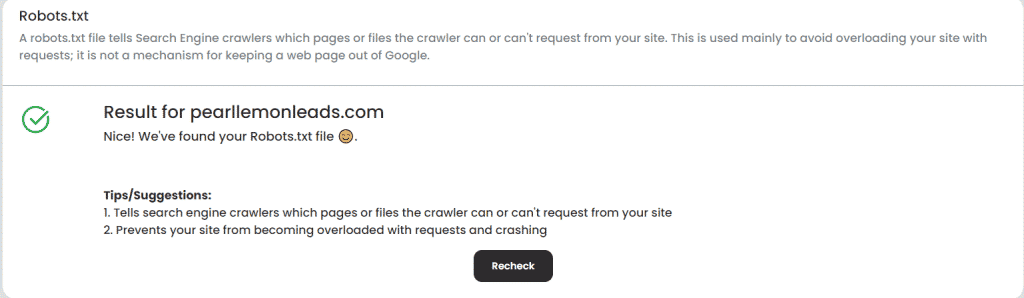

Once you hit check, you should see the results page. A successful check will look like this:

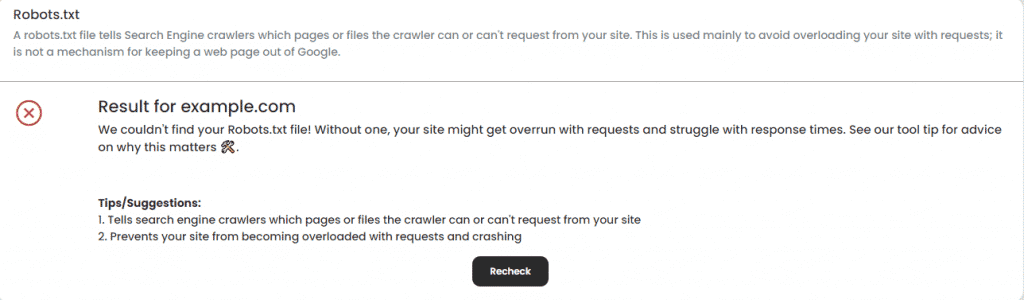

If you want to test another website, simply click recheck. If the result is negative, it will appear like this:

So if you want help with driving more traffic to your page, why not sign up today?

Get access to Serpwizz’s Robots.txt checker tool along with a whole host of other SEO auditing features.

© Copyright • SERPWIZZ • Privacy Policy


Brought to you with ❤️ by Pearl Lemon